Resume of Patrick M. Slattery

My one page resume in PDF format

This PDF was created with Typst, a LaTeX-like tool for typesetting. It's IAC for PDFs!

Resume in DOCX format

Expanded resume with detailed explanations of each point

MyWebGrocer / Mi9 Retail / Wynshop

I started working as a DevOps architect at MyWebGrocer in Winooski, Vermont in 2013.

I specialized in DevOps automation, source control management, and process improvement on a grocery eCommerce platform with yearly sales of over US $1 billion in the US, Canada, Ireland and Australia.

MyWebGrocer was sold to Mi9 Retail in 2018. The non-grocery portion of the company was divested in 2023, and the remaining grocery-focused portion became Wynshop Inc.

I'll work from the newest to the oldest items here:

Ephemeral self-hosted GitHub runners

- Implemented ephemeral self-hosted macOS and Linux build runners to reduce GitHub build costs by ~50%.

As our GitHub Enterprise organization scaled up we found we were quickly exhausting the default 50K minutes allocated to GitHub Actions each month. To resolve this we set up ephemeral self-hosted Ubuntu build runners on preemptible GKE nodes that scaled down to zero runners during periods of inactivity, reducing the cost of builds by at least 50%.

I also set up and fully documented ephemeral self-hosted macOS build runners using Packer, Tart and Tartelet for iOS builds.

IAC

- Implemented IAC for all possible systems, progressively using shell, Ansible, Terraform and Google Config Connector, managing just under 100 GKE clusters with up to 100 VM nodes each at peak.

Some of the first IAC defined systems I created were built from Docker containers and systemd unit files deployed via Ansible.

When I was on the DMS team (2015 to 2019) I used OpenStack Heat (on Rackspace) for system deployments and Ansible for the majority of application deployments.

When we migrated to Azure and later to GCP, we used a completely IAC defined Jenkins instance to perform deployments. This was a requirement from the development team as they wanted integration with their existing legacy TeamCity and Octopus Deploy CI/CD systems. We did prototype and demo FluxCD very early on in the migration to Kubernetes as a modern IAC solution but the R&D team wanted to stick with what they knew.

Eventually as TeamCity was increasingly obsolete and plagued by security bugs we migrated the R&D team to GitHub workflows for CI and ArgoCD for CD tasks.

For infrastructure deployments in GCP we initially used Google Cloud Deployment Manager but found it rather restrictive for our needs and ultimately built out a deployment pipeline using a mix of Terraform and gcloud shell scripting, as gcloud was able to do a lot of things that Terraform couldn't at that time. Over time we tried Crossplane and Google Config Connector and eventually settled on Google Config Connector as our infrastructure IAC tool.

At peak we had just under 100 GKE clusters across three continents and eight Google regions that were all managed via IAC for both the infrastructure and the applications.

IAC driven Tailscale/Headscale VPN

- Evangelized a Tailscale/Headscale based VPN utilizing the modern WireGuard protocol to replace the prior ClickOps driven OpenVPN solution, saving $10K of OpenVPN licensing fees annually and providing a far simpler to use and more reliable VPN access solution.

The IT-provided OpenVPN solution required considerable manual maintenance and was not well integrated with Google SSO. The DevOps team dogfooded a Kubernetes-based Tailscale VPN, built on the modern WireGuard protocol and using Headscale as the control plane. After proving it out we rolled it out to the rest of the company.

While we did have some initial scalability issues and several people who didn't read the docs telling them to disable IPv6 first, overall it was a great success. Users generally felt it was much more streamlined and faster to use than OpenVPN.

This solution saved at least $10K in OpenVPN licensing fees annually and eliminated the regular manual maintenance of the OpenVPN VMs which freed up the time of the IT administrator.

This was again a technology that I had initially prototyped on my home network for personal use.

SVN to Git and Bitbucket to GitHub

- Migrated from SVN to Bitbucket Server and then on to an IAC defined set of GitHub orgs driven by Peribolos and GitHub Safe Settings.

When I first started at MyWebGrocer there was a single SVN instance on a Windows 2008 server with pretty much every imaginable thing set up wrong on it. I proceeded to set up a clean new SVN instance on a Linux VM and added numerous hooks to enforce rules such as blocking commits of binaries, requiring a current SVN client version, and performing automatic backups per commit.
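The binary-blocking rule can be sketched as the core of a pre-commit hook. This is a minimal illustration, not the original hook: the extension deny-list is hypothetical, and the function only parses text in the line format printed by `svnlook changed` (status flags in the first columns, then the path).

```python
import os
from typing import List

# Hypothetical deny-list; the original hook's rules are not given in the text.
BINARY_EXTENSIONS = {".exe", ".dll", ".zip", ".jar", ".iso"}


def blocked_paths(svnlook_changed_output: str) -> List[str]:
    """Return added or modified paths whose extension is on the deny-list."""
    blocked = []
    for line in svnlook_changed_output.splitlines():
        # `svnlook changed` prints status flags first, then the path.
        status, path = line[:4].strip(), line[4:]
        if not path or status.startswith("D"):
            continue  # skip blank lines and deletions
        if os.path.splitext(path)[1].lower() in BINARY_EXTENSIONS:
            blocked.append(path)
    return blocked
```

A hook script would feed this the output of `svnlook changed -t "$TXN" "$REPOS"` and exit non-zero when the returned list is not empty.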

As mentioned above, when we started using Docker we purchased a Bitbucket Server license and I set up an on-prem Dockerized instance of it (with a staging instance as well for testing new releases). We eventually ended up with approximately 1000 repos on this instance of Bitbucket Server. We were using both Jenkins and TeamCity as on-prem CI systems with this server.

When the time came to migrate off the on-prem instance of Bitbucket Server we initially tried Bitbucket Cloud but found it to be extraordinarily limited in its security and the built-in CI Bitbucket Pipelines did not match up to what was available in GitLab or GitHub.

The extraordinarily slow adoption of new features into Bitbucket was also a major factor in our decision. For instance, I filed the original request to Add support for CODEOWNERS in September 2017, two months after GitHub added that feature. It took Atlassian six years to add native support for CODEOWNERS in Bitbucket.

So we also tried out GitLab and GitHub and ultimately decided on GitHub.

We defined the GitHub Enterprise Cloud organizations as IAC via Peribolos for user and team management and GitHub Safe Settings for organization and repo defaults. This gave the DevOps team very tight control of who could access the GitHub organizations/repos and what settings they could change. We further enforced SSO login via Google and required 2FA login for all users.

We ended up migrating about 300 of the 1000 repos to GitHub and archiving the rest into a dedicated GitHub archive organization.

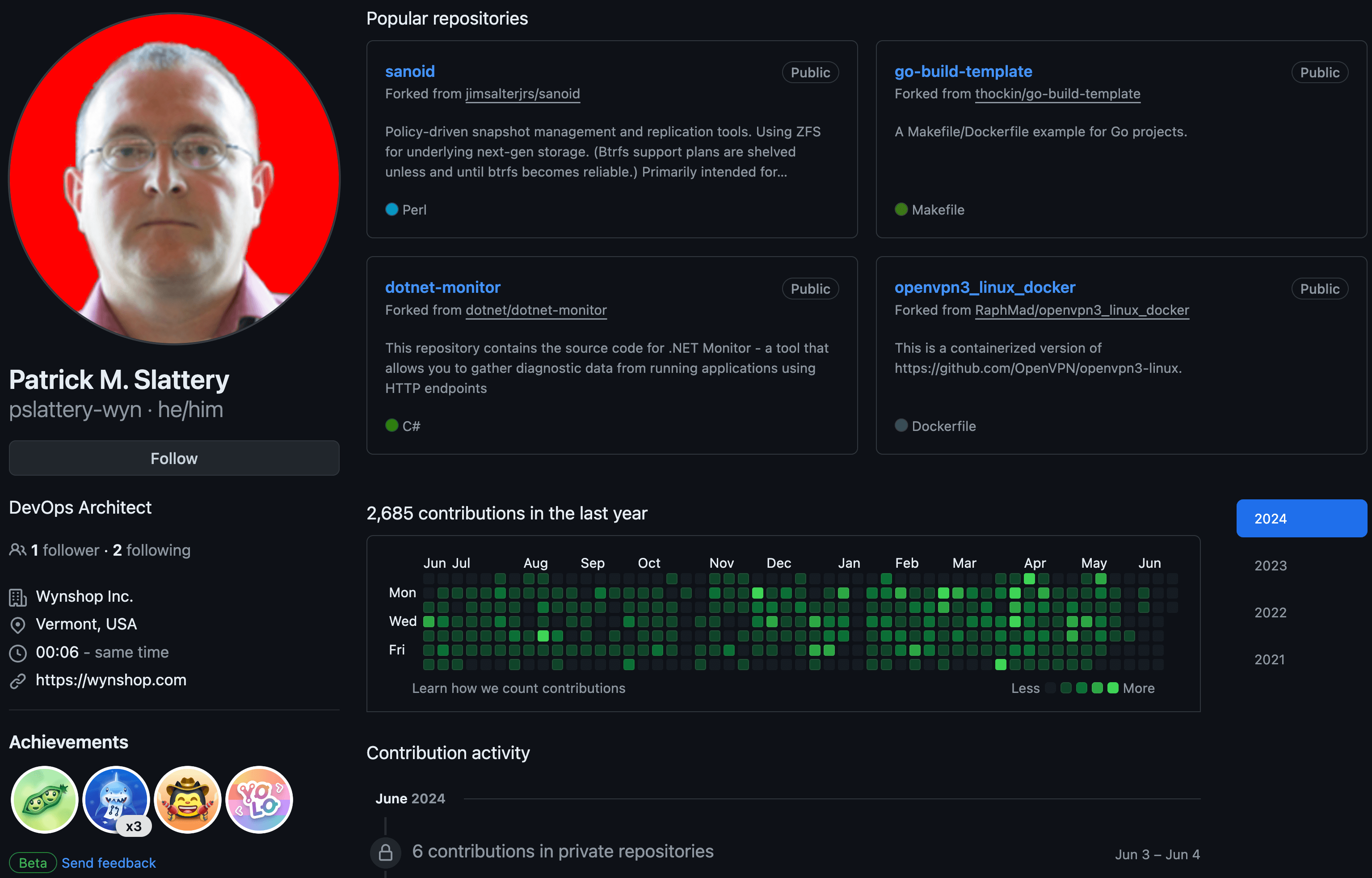

One thing I'd like to point out is that we enforced a strict GitHub user naming convention, where user names had to be in the format: first_initial_last_name-company_mnemonic

For instance my username was: pslattery-wyn

We also required that the user's full name be displayed and that a picture of their face be used for their avatar.

These requirements made it very clear to other users who was who in GitHub and made sure that no external users were inadvertently added. There were no Little Bobby Tables users in our system.
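A naming rule like this is easy to check mechanically. The sketch below is an illustration only: it assumes lowercase ASCII names without hyphens in the surname, and the allow-list of company mnemonics is hypothetical (only `wyn` appears in the example above).

```python
import re

# Hypothetical allow-list; only "wyn" comes from the example above.
ALLOWED_MNEMONICS = {"wyn"}

# First initial plus last name (at least two letters in total), a hyphen,
# then the company mnemonic.
USERNAME_RE = re.compile(r"^[a-z][a-z]+-(?P<company>[a-z0-9]+)$")


def is_valid_username(username: str) -> bool:
    """Return True if the name looks like first_initial_last_name-company_mnemonic."""
    match = USERNAME_RE.match(username)
    return match is not None and match.group("company") in ALLOWED_MNEMONICS
```

With this rule, `is_valid_username("pslattery-wyn")` passes, while a name with an unknown mnemonic or with spaces is rejected.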

Four Golden Signals for GKE

- Got top scores on the Four Golden Signals for GKE from Google.

In 2023 Google provided us with the scores from a snapshot of a single average day of our utilization numbers. The metrics derived from the areas that the DevOps team managed (Cluster bin packing and discount coverage) were both scored in the Elite category.

Cluster bin packing measures the capacity of developers and platform admins to fully allocate the CPU and memory of each node through Pod placement.

Discount coverage measures the capacity of platform admins to leverage machines that offer significant discounts, such as Spot VMs, as well as the capacity of budget owners to take advantage of long-term continuous use discounts offered by cloud providers.

The Workload rightsizing and Demand based downscaling metrics were not controlled by our team and did not achieve high scores.

See also: State of Kubernetes Cost Optimization

Scale to zero for GKE

- Implemented automated scale to zero for non production GKE clusters on nights and weekends which saved 30%.

I implemented a shell script that ran from a Kubernetes cronjob to automate the scale down to zero nodes of the non-production GKE clusters on nights and weekends. This saved on average 30% for the clusters. You still have to pay for disks in the cloud even if the VMs using those disks are scaled to zero and disks were typically our single biggest line item.

The cronjob checked a Google calendar to see when the cluster should be running. This way anyone with write access to the calendar could bring up the cluster as needed by adding an "appointment" to turn the cluster on. If there was no appointment listed for the cluster then the cluster was dehydrated.
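The decision at the heart of that cronjob can be sketched as a pure function. This is an assumption-laden illustration rather than the original shell script: fetching events from the calendar is left out, and the `(start, end)` tuples and the function name are hypothetical.

```python
from datetime import datetime
from typing import Iterable, Tuple

# An appointment is a (start, end) pair of timezone-aware datetimes.
Appointment = Tuple[datetime, datetime]


def cluster_should_be_up(now: datetime, appointments: Iterable[Appointment]) -> bool:
    """Return True if any appointment covers `now` (start inclusive, end exclusive)."""
    return any(start <= now < end for start, end in appointments)
```

When the function returns False, the job would scale the node pools to zero; when it returns True, it would leave the cluster running or scale it back up.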

We eventually replaced the cronjob/shell script with an operator written in Go that integrated with the BreakGlass application mentioned below and Google calendar to allow any user to bring up a cluster at will even if they did not have write access to the Google calendar.

BreakGlass

- Implemented a BreakGlass application to streamline developer access to environments while maintaining a full audit trail for SOC 2 compliance, which reduced emergency pages to the cloud team by 90%.

In the early days of the DevOps team at Wynshop the software engineers would have to page one of the DevOps team to grant them access to a production environment if there was a production issue and they needed to get elevated access to the environment for troubleshooting.

As you can imagine with a new product this quickly became the number one issue that the DevOps team was paged for.

At the time we used ClickOps to manually assign and remove IAM permissions for the necessary engineers. Despite impeccable documentation on exactly what to do, there were times when sleepy engineers messed up at 3AM: they granted permissions to the wrong engineer or the wrong environment, or forgot to record the changes in Jira for SOC audit tracking purposes. Sometimes the IAM permission was left in place long after the incident and simply forgotten about. I might even have been one of those sleepy engineers...

When Google added IAM conditions I quickly realized that they could be used to automate the granting and revocation of IAM permissions. I prototyped the concept with some gcloud shell functions and determined that it would suit our purposes. We then set about building a web app in Go that used Google SSO to validate the user and then asked them to pick a specific task that they wanted to accomplish, such as port forwarding to a GKE cluster or getting write access to a storage bucket.

The user would then be asked how long they needed access for (with a max of 24 hours), what environment they wanted access to and finally they would be prompted for both a reason for the access request (for the audit trail) and a Jira issue ID.

The BreakGlass app would then invoke a conditional IAM API request and the user would be automatically granted access within seconds.
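The time-boxed grant can be illustrated with a small sketch that builds a conditional IAM binding. This is not the BreakGlass code (which was written in Go); the function name, the title format and the example values are hypothetical. The binding shape (`role`, `members`, `condition`) and the `request.time < timestamp(...)` expression follow Google Cloud IAM Conditions.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

MAX_HOURS = 24  # upper bound on access duration stated in the text


def build_temporary_binding(user_email: str, role: str, hours: int,
                            reason: str, jira_id: str,
                            now: Optional[datetime] = None) -> dict:
    """Build an IAM policy binding that expires after `hours` hours."""
    if not 0 < hours <= MAX_HOURS:
        raise ValueError(f"hours must be between 1 and {MAX_HOURS}")
    now = now or datetime.now(timezone.utc)
    expiry = (now + timedelta(hours=hours)).astimezone(timezone.utc)
    stamp = expiry.strftime("%Y-%m-%dT%H:%M:%SZ")
    return {
        "role": role,
        "members": [f"user:{user_email}"],
        "condition": {
            # Title and description carry the audit context (hypothetical format).
            "title": f"breakglass-{jira_id}",
            "description": reason,
            # The grant stops applying once request.time passes the expiry.
            "expression": f'request.time < timestamp("{stamp}")',
        },
    }
```

Because the expiry lives in the condition itself, nobody has to remember to revoke the permission after the incident.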

A log was written to a private chat channel so the DevOps team could see who was requesting access and why. A JSON log file was also written to a Google Storage Bucket in WORM mode so that no one could edit or delete the logs.

BreakGlass reduced off-hours pages to the DevOps team by about 95% and led to much better sleep patterns for the DevOps team and overall much happier engineers on both sides.

And we aced our SOC audits too since the BreakGlass tool automatically recorded everything necessary for the audit trail.

Documentation

- Created extensive documentation on all aspects of deploying and maintaining MyWebGrocer/Wynshop solutions. Provided best practices guidance for software engineers and documented solutions to their frequently asked questions.

I'm a great believer that good documentation makes for good work. In my unwritten rules of DevOps I say:

Documentation should be written such that any other member of the team can perform the task solely from the documentation.

and:

The answer to any question that is asked twice should be documented.

I also believe that virtually all documentation should be available to all members of the company and that only permission restrictions should act to block users from acting upon that documentation.

When I was at GE Healthcare we had a fantastic technical writer on our team. I could hand him a stack of screenshots and he would have an initial usable document the next day. We did typically have to further refine the document to clarify some details, in one case doing approximately 50 revisions of a clustering guide for Microsoft SQL Server with our radiology application. In summer 2018, five years after I left GE Healthcare, I met a former co-worker at a friend's funeral and he mentioned that they still used that document as it was, in his words, "perfect". I can only aspire for every document to be that good.

Something that is also very important with technical documentation is that it gets reviewed and updated on a periodic basis. Technology changes fast and your internal process documentation needs to keep up with it. When documentation reaches its expected end of life I do not believe in deleting it; instead it should be clearly marked as deprecated and moved into a folder where it can be referenced if such a need ever arises.

Unfortunately you can't always make someone read the documentation... That's currently beyond my powers.

See also: The unwritten rules of DevOps

Validation procedures

- Developed procedures to validate microservices for production readiness.

I developed and documented procedures to validate microservices for production readiness. These procedures verified that each new microservice had:

- A valid Helm chart and values with no deprecated Kubernetes APIs in use.

- Valid container images.

- Valid infrastructure dependencies declared and working health and readiness checks.

- Valid logging in JSON format.

- Valid documentation for the microservice.

Once all of these checks had been passed we enabled provisioning of the service on the desired GKE clusters.
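One of these checks, the scan for deprecated Kubernetes APIs, can be sketched as follows. This is an illustration rather than the original procedure: it assumes the Helm chart has already been rendered and parsed into dictionaries, and the deny-list is a small sample of API versions that upstream Kubernetes has removed, not a complete list.

```python
from typing import Iterable, List, Tuple

# Sample of API versions removed from upstream Kubernetes (not exhaustive).
REMOVED_APIS = {
    ("extensions/v1beta1", "Ingress"),
    ("apps/v1beta1", "Deployment"),
    ("batch/v1beta1", "CronJob"),
    ("policy/v1beta1", "PodDisruptionBudget"),
}


def find_removed_apis(manifests: Iterable[dict]) -> List[Tuple[str, str, str]]:
    """Return (apiVersion, kind, name) for every manifest using a removed API."""
    hits = []
    for manifest in manifests:
        key = (manifest.get("apiVersion"), manifest.get("kind"))
        if key in REMOVED_APIS:
            name = manifest.get("metadata", {}).get("name", "<unnamed>")
            hits.append((key[0], key[1], name))
    return hits
```

An empty result means the chart passes this particular check; any hit would block provisioning until the chart is updated.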

Naming conventions

- Created and, more importantly, enforced naming conventions for Google Cloud and GitHub.

The old CS joke goes: There are only two hard things in Computer Science: cache invalidation and naming things.

Thankfully I've never had to work on cache invalidation but naming things has been a constant over the years.

When we decided to move to Google Cloud in 2020 one of the first tasks was to decide upon a naming scheme. In my opinion the naming scheme itself is not the big thing here; it's the constant policing and enforcement of that naming scheme that makes it actually useful. And that was something that I personally ensured was consistently enforced.

The core of the naming convention was a Git repo with a YAML file listing the customer names and their mnemonics.

Each environment type was also defined in this YAML.

Ultimately this consistent and well documented naming schema made it easy for developers and support to find the right resource amongst the hundreds of projects we had.

See also: GCP object naming

Security

- Advocated for enhanced security via physical security keys.

YubiKeys

When we migrated to Google Cloud I persuaded the company to purchase some YubiKeys. I had been using a pair of YubiKeys personally for some years and knew how valuable they were in achieving phishing proof security. The fact that every employee at Google who interacted with us during the onboarding period used security keys was a great indicator that Google saw their value for every user. When Google Advanced Protection Program became available I made sure that all members of the DevOps team were enrolled in this program. We also secured our GitHub accounts with the YubiKeys.

Preemptible nodes in GKE

- Implemented preemptible nodes in GKE for every internal app that could possibly use them to reduce VM costs by greater than 70%.

We implemented preemptible nodes in GKE pretty much from day one for the internal Wynshop apps that had at least 2 replicas running by default (which was the vast majority of services). This was true of both production and non-production clusters. Any StatefulSets had to run on regular GKE nodes in production. We ran all non-production StatefulSets on Spot nodes as soon as they became available.

Because preemptible nodes are at least 70% cheaper than standard nodes we also saved considerably on CPU and RAM prices by running about 60% of our total workload on the preemptible nodes.
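As a back-of-the-envelope illustration of what those two figures imply, the sketch below computes a blended saving. It assumes compute cost scales linearly with the share of workload, which is a simplification, and the function name is hypothetical.

```python
def blended_saving(preemptible_share: float, preemptible_discount: float) -> float:
    """Fraction of the all-standard-node compute bill saved overall."""
    # Only the share of workload on preemptible nodes earns the discount.
    return preemptible_share * preemptible_discount


# About 60% of the workload on nodes that are 70% cheaper.
saving = blended_saving(0.60, 0.70)
print(f"Blended compute saving: about {saving:.0%}")
```

Under these assumptions the overall compute bill is roughly 42% lower than it would be on standard nodes alone.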

We ran the gke-preemptible-killer service so that we automatically started killing off preemptible nodes before they ever aged out (they run for a maximum of 24 hours). This led to a natural chaos monkey effect as at least one node was being killed off every hour in a typical cluster.

In the unusual case where Google ran out of preemptible VMs we did provision an autoscaled from zero node pool with standard nodes. This node pool would only scale up if there were no more preemptible or spot nodes available. While it cost considerably more to run these nodes it was the safest solution to ensure maximum uptime without the need for human intervention.

RELK (Redis Elasticsearch Logstash Kibana)

- RELK (Redis, Elasticsearch, Logstash, Kibana): initially a project I created for my 2015 summer intern to parse and analyze our web logs, it spread to analyzing all system activity logs company-wide until we moved to Google.

This was originally a proof of concept project that I assigned to one of our summer interns in 2015 after noticing that he was looking a little bored. After the original proof of concept showed that the tool worked better than any other tool we had to hand, it spread to more and more systems until effectively all incoming logs in the company were being analyzed by it, and the development support team (SREs by another name) used it to help chase down some rather pernicious bugs.

We kept using RELK until we had moved all of our grocery clients over to GKE and were using StackDriver instead at that point.

HAProxy API gateway

- Migrated the legacy WSO2 API gateway to use HAProxy instead. This saved over $100K per year in licensing fees alone and greatly improved uptimes and on-call engineer happiness.

In early 2015 I joined the DMS (Digital Marketing Services) team at MyWebGrocer, which provided backend advertising and product information API services to the MyWebGrocer SaaS grocery platform. I joined as their systems engineer was retiring and they needed someone to maintain their Linux systems.

They used a fairly complicated API gateway from WSO2 that was constantly breaking, to the point that at least one engineer had quit the team because of the constant pages during on-call weeks. I spent a few weeks before the prior systems engineer left learning the WSO2 system and was quickly convinced that it was vastly overcomplicated (not to mention overpriced!) for the job. I created a quick proof of concept using HAProxy as an API gateway, but the prior systems engineer simply would not hear of replacing WSO2.

So we soldiered on with the WSO2 API gateway and were working on containerizing it after he retired. Maybe two weeks later we experienced an outage with the WSO2 API gateway that we simply could not figure out how to fix and that their support was unable to help us with. About 12 hours into the all hands on deck outage I pulled out the HAProxy proof of concept that I had created and said:

How about we try this instead?

None of us had any better ideas at the time so we pulled an all-nighter and worked on fully integrating HAProxy into the DMS APIs solution and had everything working again within 24 hours of the beginning of the outage.

With HAProxy in the mix outages became a thing of the past and because everything was containerized we could quickly scale the solution as needed (which we did need on Super Bowl Sundays!). We did end up purchasing commercial licenses for HAProxy so that we could take advantage of their excellent technical support and get a few features, such as rate limiting, that were not in the open source version at that time. However, compared to the cost of the WSO2 solution we saved about $100K a year on licensing alone and the total system resource footprint was about half that of the WSO2 solution, so we saved there too.

When later talking to the HAProxy product manager Baptiste, he mentioned that we were the first company he knew of to use HAProxy as an API gateway. To me using HAProxy as an API gateway was just a logical next step at the time; there really was no need for a monster API gateway tool such as the WSO2 API gateway that we had been using. We took the structured logs from HAProxy and fed them via Filebeat into Elasticsearch, and then could analyze the logs and produce reports for management with Kibana. It was just classical UNIX toolchain work really:

Make each tool do one thing well with the output of each tool becoming the input to the next

Baptiste did later write a series of articles on Using HAProxy as an API Gateway but that was several years after we had implemented this.

Using Elasticsearch

- Applied the knowledge gained from RELK to migrating from source/replica MySQL and Microsoft SQL Server databases to a sharded and replicated document store in Elasticsearch for use by the DMS API layer. This cut costs by ~50% and was about 10 times faster.

At that time (early 2016) we had a fairly standard three layer API/cache/database setup with Java based APIs, memcached for caching, with MySQL and Microsoft SQL Server for backend databases.

We started replacing this with Go and Node based APIs with Elasticsearch as the database layer. We found that Elasticsearch was much faster (by as much as 10x!) than the memcached/MySQL combo in general use so we dispensed with the caching layer.

We did still keep a backend MySQL VM for ETL usage and for loading data into Elasticsearch on a periodic basis, but it was able to be a much smaller machine so we saved a lot of resources.

ZFS for Linux

- Implemented migration from LVM to ZFS for data disks on Linux systems, and set up automatic data snapshots and replication using zfs send between data-centers on cloud VMs.

At that time (early 2016) we did not have a full disaster recovery solution beyond restoring from offline backups, so I started implementing the ZFS file system on our cloud Linux VMs. This gave us automated data integrity scrubs, regular data snapshots and inter data-center replication of database data to a "cold standby" system where only the ZFS service was running.
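The snapshot-and-replicate cycle can be sketched as a pair of command builders. This is an illustration under stated assumptions, not the original automation: the dataset, snapshot and host names are hypothetical, and transport over `ssh` is an assumption. The `zfs snapshot`, `zfs send -i` and `zfs receive -F` invocations are standard ZFS commands.

```python
from typing import List, Tuple


def snapshot_cmd(dataset: str, snap: str) -> List[str]:
    """Command that takes a point-in-time snapshot of a dataset."""
    return ["zfs", "snapshot", f"{dataset}@{snap}"]


def incremental_send_cmds(dataset: str, prev_snap: str, new_snap: str,
                          remote_host: str, remote_dataset: str
                          ) -> Tuple[List[str], List[str]]:
    """Commands for the two ends of an incremental replication pipe."""
    # Send only the blocks that changed between the two snapshots.
    send = ["zfs", "send", "-i", f"{dataset}@{prev_snap}", f"{dataset}@{new_snap}"]
    # Receive on the standby, rolling back any local changes first (-F).
    receive = ["ssh", remote_host, "zfs", "receive", "-F", remote_dataset]
    return send, receive
```

In practice the output of the send command is piped into the receive command, for example with two `subprocess.Popen` calls connected by a pipe.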

Thankfully we never had a disaster where we had to use the replica but we slept better knowing it was there.

I've run ZFS by default on my local Fedora workstation ever since then. I had been using ZFS on my physical illumos (OpenSolaris) and macOS servers at home before we implemented ZFS on the Linux VMs so I did have a reasonable amount of experience with ZFS at that time, just not on Linux.

Evangelized Docker

- Evangelized Docker and was the driving force behind migrating several critical internal systems to Docker in early 2014, starting only 1 year after the initial release of Docker.

As I recall a coworker sent me an email in May 2014 with an article from The Register mentioning Docker and Google's home-grown lmctfy (Let Me Contain That For You). This was the first I had heard of Docker (which was then just about 1 year after its initial release) and I quickly saw its potential. I was already familiar with BSD jails and Joyent's zones on Solaris so I quickly understood that a tool that could implement a similar philosophy on Linux systems would have widespread implications for DevOps.

After testing a few fairly basic apps I tried something a bit more complex, the staging instance of Atlassian's FishEye/Crucible code review tool for SVN. Since I was the sysadmin for that app (and our on-prem Jira and SVN instances) I had considerable leeway to try this out on a system such as this that really wouldn't affect anyone except myself if I broke it. I only used the staging instance to test new app versions/patches when they became available in any case so even if it completely broke everything no-one except myself would be any the wiser.

Well as you can imagine it did work well and I soon had a set of containers for the staging instances of Atlassian FishEye/Crucible, JIRA and SVN. I persuaded the engineering manager to start us on the migration to Git at this time as it was pretty obvious that most of the tooling for Docker was orientated towards Git and SVN really wasn't going to be supported. While I attempted to get some traction for GitHub we ended up purchasing a license for an on-prem instance of Bitbucket Server (Stash) which was pretty inevitable since we were an Atlassian shop at the time.

Around this time I hosted a meeting with the engineering leads at MyWebGrocer and demonstrated how Docker and containers in general worked. I recall saying:

One of these days everything we run here will run this way

I recall a few barely stifled laughs around the room at the time, but the CTO at the time, who was sitting beside me, agreed with me. I think I had the last laugh on that though as by mid 2024 we had retired the last legacy VMs and everything else was indeed running in containers.

Our production instance of Bitbucket Server started its life in a set of Docker containers (a Percona based MySQL backend, the Bitbucket Server app server, an nginx reverse proxy and an nginx based sorry server for maintenance messages) living in a CentOS 7 VM inside an ESXi cluster. The containers and their backing data on a set of ZFS volumes were eventually migrated to a Google Cloud VM before being finally decommissioned in mid 2024 as we transitioned to GitHub Enterprise Cloud.

Mentoring interns

- I played an active part in mentoring interns at MyWebGrocer, encouraging them to go beyond what they thought themselves capable of achieving. The interns I mentored directly credited me with helping them gain both the hard and soft skills necessary to get excellent jobs straight out of college.

- Stephen (2014/2015) is a software engineer on the PSFC fusion reactor project at MIT and previously worked at Docker.

  - Stephen was never officially assigned to me as an intern but gravitated towards me as a mentor as I was working on the sort of stuff (Docker) that interested him far more than what he was officially assigned (WordPress).

- Will (2015/2016) has worked as a DevOps/SRE engineer with Cisco and DataRobot.

  - Will was also not originally assigned to me as an intern but his temporary desk was right behind mine in a corridor and I could plainly see that he had no work to do so I asked him if he wanted to implement some tooling that I was wanting to try but did not have the bandwidth to implement due to higher priority commitments. He eagerly agreed and you can read more on this in the RELK section above. Will ended up getting a job with Cisco due to his experience with regular expressions used for pattern matching in the RELK stack.

- Nidhi (2017) is a software engineer at Dartmouth Hitchcock.

  - Nidhi was an intern on the DMS team at MyWebGrocer and was the first intern that I assigned a Linux (running Fedora 24 as I recall) laptop to from day one. At the end of the summer we gifted her the laptop (it was an older model) and she re-imaged it with the latest Fedora release so that she could continue her learning path on Linux.

- Some of the skills that I taught to them were:

  - How to effectively (and safely) manage servers and application deployments.

  - Teaching of good habits such as extensive note taking and record keeping.

  - How to research and evaluate technologies and tools.

  - How to analyze an environment and plan for improvement and future scaling.

  - How to socially network with the best and brightest employees.

- There were no interns under Mi9 Retail / Wynshop.

IDX Systems Corporation / GE Healthcare

Principal Systems Engineer - 2002 to 2013

I started working at IDX Systems Corporation in South Burlington, Vermont in 2002.

GE Healthcare purchased IDX Systems Corporation in late 2005.

I specialized in automation, virtualization, storage and source control management for radiology applications.

Introduced Perforce

- Introduced Perforce to replace SourceUnSafe for source control.

As part of the creation of scripted golden images for Windows I needed a revision control system that could handle $ chars in the filenames. At Kodak I had used ClearCase for this but the price of ClearCase was way out of our budget for this so I looked around at the state of the source control market and found that Perforce would work perfectly for this task and better yet it was free for up to 5 users, even in a commercial setting. While initially I only ran the Perforce server instance on my local workstation, I quickly became convinced that it was a superior solution to the SourceUnSafe solution we were using at the time and when the matter of replacing SourceUnSafe came up a year or two later Perforce easily won the competition to replace SourceUnSafe.

The SourceUnSafe moniker came from an incident on the first day of the migration when the consultant we had brought in was able to access the SourceUnSafe files without the password. He simply dryly commented that it was in no way safe...

We introduced Perforce to many other development teams at GE Healthcare and had thousands of users on the system by the time I left GE Healthcare in 2013.

Perforce in 2012 was far ahead of GitHub in 2024 as far as its system management goes. For a system where security is necessary Perforce wins hands down every time. It's definitely one of my favorite pieces of software that I ever managed.

Introduced VMware

- Introduced VMware virtualization for dev and test environments.

Initially all testing of the radiology apps was done on bare metal systems. While the scripted golden images greatly helped with much faster provisioning of the systems, the process simply wasn't as fast or versatile as we really needed.

After seeing a demonstration of VMware ESX we started planning a migration to VMware ESX during the 2.x release but ultimately didn't purchase it until VMware ESX 3.0 was released in mid 2006.

We went on to manage multiple virtual environments for dev and QA with several hundred VMs in total.

Introduced NetApp storage

- Introduced NetApp storage for NFS and block (FC and iSCSI) storage.

Concurrent with the move from bare metal to VMware we also needed a shared storage array to take advantage of the host migration capabilities of VMware. After much research we purchased a NetApp R200 array and it turned out to be the most reliable single system that I've ever used. Even when we had a (non-redundant) CRAC fail one night in the R&D datacenter the NetApp kept running and serving data, while many other systems failed and shut down or blue-screened.

After GE Healthcare bought IDX Systems we bought a few other NetApp arrays as NetApp was the preferred storage array at GE.

Introduced Tintri storage

- Later introduced Tintri storage to replace NetApp NFS/SAN for virtualization storage.

While we were generally very happy with our NetApp storage arrays the biggest complaint was latency. There is an I/O blender effect in all large shared arrays of the time and NetApp arrays were certainly not immune from this effect.

We spent a considerable amount of time looking at competitors' SSD-based arrays as NetApp were dragging their feet on providing an SSD-based array, but did not find any that we liked until we came across a very new vendor named Tintri. Their CEO got on the sales call and very quickly and succinctly explained how their array worked in such a way that I was 100% confident that they would meet our requirements.

Our most essential requirement was that the array have anti-bitrot mechanisms in place, which is a core feature of every NetApp, and surprisingly few vendors could prove that they supported such a mechanism.

The Tintri array was about $85K in 2011. While that's certainly not an outrageous price for a departmental storage array, it was from a very new vendor (I think they had sold maybe 10 arrays at that point) and I was warned that if it failed I'd probably be out of a job. Big companies such as GE don't tend to move the needle very far when buying IT equipment. The phrase "Nobody Gets Fired For Buying IBM" certainly got dropped a few times. So I decided that we should spend a few thousand dollars extra and get the full install service package just to be safe and not mess up anything during install. As I recall three Tintri employees showed up with the box (it was a 3U array) and after racking it, there were maybe 5 questions in the setup UI and it was online. I was like: Was that it?

And their response was: Yeah, go ahead and copy a VM over and see how it goes, then we can go to lunch.

Needless to say I was pretty incredulous. It had not been long since I had spent two full days with a pair of engineers from EMC getting one of their arrays online in our lab, and at the end of those two days it still wasn't able to take snapshots, so at that point I sent the EMC engineers packing along with their hunk of junk array.

But true to their word everything did just work with the Tintri array. Within a few weeks we had migrated most of our VMs to the Tintri and freed up a bunch of space on the NetApp arrays. That one little 3U array was replacing about 60U of NetApp disk trays!

Afterwards I half joked to the sales rep that my six year old could have installed the array. He responded with:

If you put that up on YouTube I'll give you a free array!

Unfortunately the lawyers stepped in and stopped that. But I have no doubt that my then six year old, and now Eagle Scout, could have done it.

We ended up buying multiple Tintri arrays for different R&D physical locations. They were very definitely a game changer in supporting VMware.

Created scripted golden images

- Created scripted golden images for factory and field deployment of GE Healthcare Centricity RIS and PACS systems.

I created scripted golden images for factory and field installation of Windows Server 2000 through 2008 R2, SQL Server and the GE Healthcare Centricity RIS and PACS software on HP and IBM physical servers and later VMware VMs.

Systems Environment Specifications

- Maintained the Systems Environment Specifications document for GE Healthcare Centricity RIS and PACS systems.

I maintained the Systems Environment Specifications document and its associated bill of materials documents and diagrams.

The Systems Environment Specifications document defined the core rules of what we expected from customers in order to deploy our RIS and PACS systems in their environment.

The bill of materials documents and diagrams defined the build process for the system integration factory floor: where each component was to be placed within the system and how the system was to be built for delivery to the customer.

Provided level 4 support

- Provided level 4 support for GE Healthcare Centricity RIS-IC and PACS product lines. Frequently traveled to customer sites across the USA to resolve issues that had risen to executive level.

When issues arose at customer sites that could not be resolved by the extensive network of GE field engineers or by the remote support staff located in the same building as me, I was frequently sent onsite to resolve them. These issues were typically visible at the highest levels of the organization, as in the CEO of the hospital had called the CEO of GE Healthcare IT and chewed him out. I'd then get sent to fix the issue with minimal notice if it was something that we felt I could fix onsite. I got pretty good at calming customers down, walking them through the problem and showing them exactly what I changed to resolve the issue.

Lockheed Martin

This section is not in the one page PDF resume but I'm including it here for context.

I worked as a contractor at Lockheed Martin for a few months in 2002.

Lockheed Martin - Dallas TX

2002

3D visualizations

- 3D visualizations for radar training systems

I was hired as a contractor working on unclassified 3D visualizations of the proposed mechanical designs of the training radar stations of the P3 Orion anti-submarine warfare system.

I don't know if the image below is of the training system or the real workstation on the P3, as the training system was designed to be as close to the real thing as possible, except of course that the radar data being displayed was being piped in by an SGI workstation instead of a radar antenna.

Image source

Eastman Kodak Health Imaging

This section is not in the one page PDF resume but I'm including it here for context as Eastman Kodak was the first Fortune 500 (#124 in FY 2000) company I worked for and is where I learned a lot from some very smart people.

Systems Engineer

Eastman Kodak - Dallas TX

Specializing in production and development support

1997 - 2002

Provided level 3 support

- Provided level 3 support of Kodak PACS systems on Windows.

I was originally hired as a level 3 tech support contractor providing Windows NT specific support for a new generation of Kodak PACS (Picture Archiving and Communication System) viewing systems. The prior PACS viewer systems were Solaris and Macintosh based, so the tech support team needed a lot of hand-holding to become fully self-sufficient with the new Windows NT based systems.

While I spent much of my time directly training/assisting the level 2 tech support technicians, I also wrote dedicated support software to assist the level 2 team to connect to, diagnose and troubleshoot remote PACS viewing systems.

Most connections were via Telnet on a shared modem bank, and all connection logs were saved automatically by the support software so that we could review any issues later.

I spent three years in this role and met my wife while she was translating a support call from Argentina that I was assisting with.

Security audit

- Performed security audits and enhancements of the Kodak PACS systems.

After leaving the level 3 support role I was offered another contract to evaluate the security and provide security enhancements for a new Windows based PACS server and web based viewer. At the end of that contract I was offered a full time systems engineer position in the R&D organization at Eastman Kodak Health Imaging.

Source control manager

- Maintained source control (SourceUnSafe and ClearCase) and build pipeline.

One of the first tasks I had as a new systems engineer in the R&D organization was to take over the management of the existing team specific SourceUnSafe instance and the build pipeline.

Later on I was chosen as the site admin for ClearCase and was sent for a month of training on ClearCase and then worked on migration of the SourceUnSafe instances to ClearCase.

Created scripted golden images

- Created scripted golden images for factory and field deployment of Kodak PACS systems.

I created scripted golden images for factory and field installation of Windows Server 2000, SQL Server and the Kodak PACS server software on the chosen hardware (IBM Netfinity 5500 servers). We would use this image internally for weekly rebuilds of the physical systems for QA testing etc.

Bear in mind that VMware ESX Server 1.0 wasn't released until 2001 so server VMs really weren't a thing yet back then.

Set up Kodak PACS at Intel

- Set up Kodak PACS servers in the Intel datacenter

I traveled to Santa Clara CA to set up a group of Kodak PACS servers in the Intel datacenter directly across the street from their HQ (Robert Noyce building at 2200 Mission College Blvd). The servers were to be used by Cedars-Sinai Medical Center in Los Angeles as an early web based PACS proof of concept.

It was really cool to visit the place where CPUs got their start.

See: Kodak, Cedars-Sinai to pilot ASP PACS model

FAQ

AWS experience

I don't have any production AWS experience. I have used it personally but not enough to say I have any real experience with it compared to my experience with Azure or GCP.

It simply wasn't realistic for MyWebGrocer/Wynshop to use AWS, which is why we went with Azure and later GCP. Had the sales team gone into a grocery business trying to sell them a solution that ran on AWS they would have very quickly found themselves being shown the door. While Amazon has struggled to find market share in the grocery business, they are not giving up and traditional grocery chains are not counting them out.